They trained algorithms to turn the brain patterns into sentences in real time, with word error rates as low as 3%.
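Word error rate is conventionally calculated as the number of word-level edits (substitutions, deletions and insertions) needed to turn the decoded sentence into the sentence actually spoken, divided by the number of words spoken. A rate of 3% therefore means roughly three mistaken words per hundred. The sketch below assumes that standard definition; the study's own scoring may differ in details such as how words are split, and the function name `word_error_rate` is purely illustrative.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the reference length (illustrative sketch)."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # dp[i][j] = fewest edits turning the first i reference words
    # into the first j hypothesis words
    dp = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dp[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dp[0][j] = j  # insert all j hypothesis words

    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dp[i][j] = min(
                dp[i - 1][j] + 1,         # deletion
                dp[i][j - 1] + 1,         # insertion
                dp[i - 1][j - 1] + cost,  # substitution (or exact match)
            )
    return dp[len(ref)][len(hyp)] / len(ref)


# One wrong word out of five gives a 20% word error rate.
print(word_error_rate("the quick brown fox jumps", "the quick brown box jumps"))
```

Using edit distance rather than a simple word-by-word comparison means a single dropped or extra word is counted once, instead of shifting every later word out of alignment.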

Until now, these so-called "brain-machine interfaces" have had limited success in decoding neural activity.

The study is published in the journal Nature Neuroscience.

Earlier efforts in this area were only able to decode fragments of spoken words or a small percentage of the words contained in particular phrases.

Machine learning specialist Dr Joseph Makin from the University of California, San Francisco (UCSF), US, and colleagues tried to improve the accuracy.

Four volunteers read sentences aloud while electrodes recorded their brain activity.

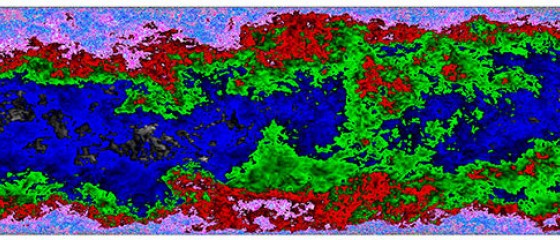

The brain activity was fed into a computing system, which created a representation of regularly occurring features in that data.

These patterns are likely to be related to repeated features of speech such as vowels, consonants or commands to parts of the mouth.

Another part of the system decoded this representation word-by-word to form sentences.

However, the authors freely admit the study's caveats. For example, the speech to be decoded was limited to 30-50 sentences.

"Although we should like the decoder to learn and exploit the regularities of the language, it remains to show how many data would be required to expand from our tiny languages to a more general form of English," the researchers wrote in their Nature Neuroscience paper.

But they add that the decoder is not simply classifying sentences based on their structure. They know this because its performance improved when sentences that were not used in the tests themselves were added to the training set.

The scientists say this proves that the machine interface is identifying single words, not just sentences. In principle, this means it could be possible to decode sentences never encountered in a training set.

When the system was trained on brain activity and speech from one person before being trained on another volunteer, decoding results improved, suggesting that the technique may be transferable across people.